// published on Taylor and Francis: Human–Computer Interaction: Table of Contents // visit site

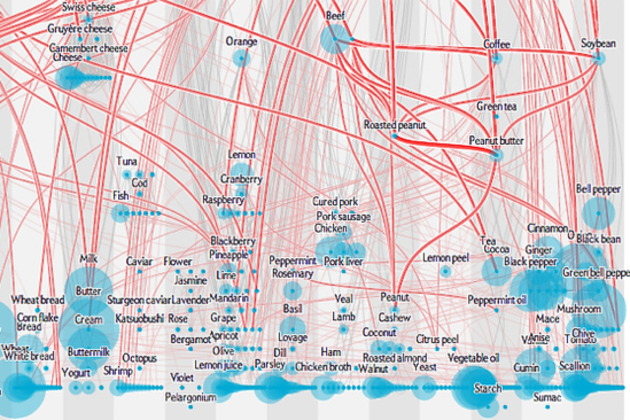

Some tastes just fit together perfectly. But why? This month, Scientific American tackles the question with an interactive chart that combines chemical analysis of 381 ingredients with data from over 50,000 recipes. Each red line indicates a chemical compound shared by two ingredients, such as the sugars common to an apple and a glass of white wine. The analysis also unearths less expected links, like a surprising number of compounds shared by soybeans and black tea. It's a matter of taste whether those common compounds actually make the foods taste better together, but there's reason to think they do: the underlying research finds that, in European cuisine at least, chefs tend to pair flavors based on shared chemistry.
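To make the "shared compound" idea concrete, here is a minimal sketch, assuming each ingredient is represented as a set of flavor-compound names. The profiles below are hypothetical placeholders, not data from the Scientific American chart or the underlying study, and the function names `shared_compounds` and `mean_shared_per_recipe` are illustrative only.

```python
from itertools import combinations

# Hypothetical flavor profiles: ingredient -> set of compound names.
# These are placeholders for illustration, not real chemical data.
PROFILES: dict[str, set[str]] = {
    "apple": {"compound_a", "compound_b", "compound_c"},
    "white wine": {"compound_a", "compound_b", "compound_d"},
    "soybean": {"compound_c", "compound_e"},
    "black tea": {"compound_c", "compound_e", "compound_f"},
}


def shared_compounds(a: str, b: str) -> set[str]:
    """Return the compounds two ingredients have in common (one link per compound)."""
    return PROFILES[a] & PROFILES[b]


def mean_shared_per_recipe(recipe: list[str]) -> float:
    """Average number of shared compounds over every ingredient pair in a recipe."""
    pairs = list(combinations(recipe, 2))
    if not pairs:
        return 0.0  # a one-ingredient recipe has no pairs to compare
    return sum(len(shared_compounds(a, b)) for a, b in pairs) / len(pairs)


if __name__ == "__main__":
    print(sorted(shared_compounds("apple", "white wine")))  # ['compound_a', 'compound_b']
    print(mean_shared_per_recipe(["apple", "white wine", "soybean"]))  # 1.0
```

Comparing a cuisine's average score against the same score for randomly assembled ingredient sets is one plausible way to ask whether chefs favor shared chemistry; treat that as an assumption about method, not a description of the study's exact procedure.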

One difference I find striking between artists and scientists is how the two groups deal with uncertainty. My scientist friends and colleagues are usually determined to ‘pin down’ the uncertainty in their models, define …

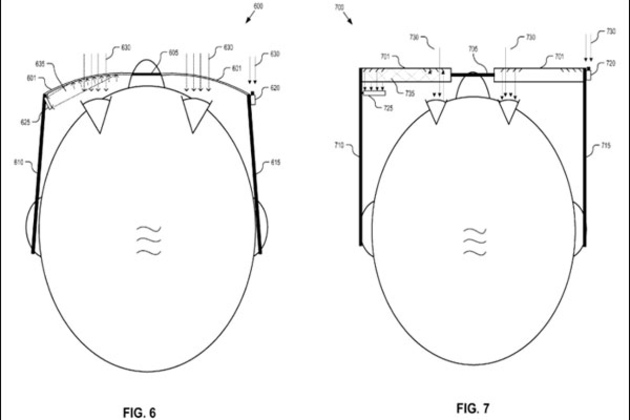

Advertisers spend heaps of cash on branding, bannering, and product-placing. But does anyone really look at those ads? Google could be betting that advertisers will pay to know whether consumers are actually looking at their billboards, magazine spreads, and online ads. The company was just granted a patent for "pay-per-gaze" advertising, which would use a Google Glass-like eye sensor to detect when consumers are looking at advertisements, both in the real world and online.

From the patent application, which was filed in May 2011:

Pay per gaze advertising need not be limited to on-line advertisements, but rather can be extended to conventional advertisement media including billboards, magazines, newspapers, and other forms of...
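The excerpt above does not spell out how gazes would be billed. As a purely hypothetical sketch, assuming the eye sensor reports one event per look with an ad identifier and a dwell time, per-gaze charges could be tallied as below. `GazeEvent`, `MIN_DWELL_S`, and the flat per-gaze price are invented for illustration and are not taken from Google's patent.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class GazeEvent:
    """Hypothetical record: one detected look at one advertisement."""
    ad_id: str
    dwell_seconds: float


# Hypothetical billing parameters, chosen only for illustration.
MIN_DWELL_S = 0.5         # ignore glances shorter than this
PRICE_PER_GAZE_CENTS = 1  # flat charge per qualifying gaze, in integer cents


def billable_gazes(events: list[GazeEvent]) -> Counter:
    """Count gazes per ad that last at least MIN_DWELL_S seconds."""
    return Counter(e.ad_id for e in events if e.dwell_seconds >= MIN_DWELL_S)


def invoice(events: list[GazeEvent]) -> dict[str, int]:
    """Charge each ad a flat price (in cents) for every qualifying gaze."""
    return {ad: n * PRICE_PER_GAZE_CENTS for ad, n in billable_gazes(events).items()}


if __name__ == "__main__":
    log = [
        GazeEvent("billboard-1", 1.2),
        GazeEvent("billboard-1", 0.2),  # too short to count
        GazeEvent("magazine-7", 0.8),
        GazeEvent("billboard-1", 2.0),
    ]
    print(invoice(log))  # {'billboard-1': 2, 'magazine-7': 1}
```

Amounts are kept in integer cents to avoid floating-point rounding in the totals; the dwell threshold is one simple way to separate a deliberate look from a passing glance.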